Neural rendering is an approach to image synthesis that blends the strengths of traditional computer graphics with machine learning. Unlike traditional rendering techniques, which simulate physical phenomena using predefined rules, neural rendering lets models learn how the world looks, so they can generate visuals by recognizing patterns, textures, and lighting in large datasets. Read on to explore a technology that is changing, and in some cases may even replace, the way 3D images and videos are created.

What Is Neural Rendering and Why It Matters.

Neural rendering is a transformative technique that is changing how 3D content is created and experienced on the web. From hyper-realistic virtual clothing try-ons to interactive 3D tours, neural rendering offers a high level of realism, adaptability, and interactivity in real time, making digital experiences more immersive.

How Neural Rendering Evolved from Classic Techniques

Before AI entered the scene, rendering involved labor-intensive modeling, lighting setup, and extensive computational time. Traditional pipelines required artists and developers to manually configure every visual element, which often led to static scenes and long rendering times.

Neural rendering removes much of that overhead. It is worth mentioning, however, that many artists still feel more comfortable with the traditional workflow, which they find more creative and, most importantly, much more precise.

By learning from datasets—images, 3D scans, or real-world videos—these systems infer depth, texture, and lighting automatically. The result is an efficient process that not only speeds up visual generation but also unlocks dynamic and interactive content with little manual intervention.

The video below provides a comprehensive breakdown of how rendering has evolved, starting from classic methods like ray tracing and rasterization to more recent developments using machine learning. It explains both forward rendering (generating images from 3D data) and inverse rendering (reconstructing 3D scenes from 2D images). Importantly, the speaker demonstrates how neural networks can be used to speed up rendering, denoise images, and enable real-time inference by learning efficient rendering policies. You’ll also see how neural rendering integrates into both traditional and differentiable rendering pipelines, paving the way for dynamic, data-driven visuals.

A Look Inside How Neural Rendering Works.

At its core, neural rendering involves training a deep learning model using input data such as 2D images, depth maps, or point clouds. This training process teaches the neural network how to “see” and interpret 3D geometry, textures, and lighting conditions.

Once trained, the system can generate or infer new visual outputs for different angles, lighting setups, or interactions. With a sufficiently optimized model, this inference can happen in real time, allowing users to experience content that adjusts dynamically to their input. If you're working with high-resolution renders or complex AI workflows, using a cloud render farm like RebusFarm can drastically reduce local hardware strain and speed up experimentation.

Key Technologies Behind Neural Rendering.

To truly understand the potential of neural rendering, it's essential to look at the key technologies driving its innovation. These foundational AI methods allow systems to synthesize realistic visuals, adapt to lighting changes, generate unseen perspectives, and apply fine surface details with impressive accuracy. Here’s a breakdown of the core technologies transforming how 3D content is rendered:

- Neural Radiance Fields (NeRFs)

NeRFs are one of the most exciting developments in the world of neural rendering. They allow AI systems to synthesize new views of a scene using only a few 2D reference images. By modeling how light radiates through space, NeRFs reconstruct detailed 3D scenes with photorealistic lighting, shadows, and reflections. This technique is especially useful for applications like virtual walkthroughs and novel view synthesis in both web and AR/VR environments.
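As a rough illustration of how a radiance field turns into a pixel, the sketch below shows two building blocks commonly associated with NeRF: a sin/cos positional encoding of coordinates, and front-to-back compositing of density and color samples along a single camera ray. It is a minimal NumPy sketch under stated assumptions, not a full NeRF: the function names, the number of frequencies, and the toy sample values are made up for illustration, and the densities and colors would normally come from a trained network.

```python
import numpy as np

def positional_encoding(x, num_freqs=4):
    """Lift coordinates to sin/cos features at doubling frequencies."""
    freqs = (2.0 ** np.arange(num_freqs)) * np.pi        # shape (F,)
    angles = x[..., None] * freqs                        # shape (..., D, F)
    enc = np.concatenate([np.sin(angles), np.cos(angles)], axis=-1)
    return enc.reshape(*x.shape[:-1], -1)                # shape (..., D * 2F)

def composite_ray(densities, colors, deltas):
    """Blend samples along one ray, front to back.

    densities: (N,) volume density at each sample
    colors:    (N, 3) RGB at each sample
    deltas:    (N,) distance covered by each sample
    """
    # Opacity contributed by each segment of the ray.
    alphas = 1.0 - np.exp(-densities * deltas)
    # Transmittance: fraction of light that reaches each sample unblocked.
    trans = np.cumprod(np.concatenate([[1.0], 1.0 - alphas[:-1]]))
    weights = trans * alphas
    pixel = (weights[:, None] * colors).sum(axis=0)
    return pixel, weights

# Toy ray (assumed values): empty space, a nearly opaque red sample,
# then a faint green sample hidden behind it.
densities = np.array([0.0, 50.0, 1.0])
colors = np.array([[0.0, 0.0, 1.0],
                   [1.0, 0.0, 0.0],
                   [0.0, 1.0, 0.0]])
deltas = np.full(3, 0.5)
pixel, weights = composite_ray(densities, colors, deltas)

# Encoding five 3D points with four frequencies gives 24 features each.
features = positional_encoding(np.zeros((5, 3)))
```

In this toy ray the opaque red sample blocks almost everything behind it, so the resulting pixel is essentially red. Because every step is differentiable, the same computation can be used to train the network from photographs.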

The video below offers an accessible introduction to Neural Radiance Fields (NeRFs), a method that uses deep learning to generate novel views of a scene from just a few input photographs. Instead of relying on traditional 3D meshes or voxel grids, NeRF fits a neural network to represent a scene as a continuous volumetric function. By querying the network with coordinates and viewing directions, it can render photo-realistic images from any angle, capturing fine lighting effects, reflections, and even transparency. The presenter walks through how NeRF achieves this through differentiable volume rendering and positional encoding, illustrating its power with both synthetic and real-world examples.

- Generative Adversarial Networks (GANs)

GANs consist of two neural networks, a generator and a discriminator, that compete with each other to produce increasingly convincing visual content. In neural rendering, GANs are often used to generate or upscale high-resolution textures, fill in missing details, or stylize content. They’re particularly effective in refining the realism of rendered surfaces or environments, helping bridge the gap between raw output and polished, production-ready visuals.
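The competition between the two networks can be expressed as a pair of loss functions. The sketch below, written in NumPy, assumes the discriminator outputs a probability that an image is real and uses the common binary cross-entropy formulation with the non-saturating generator loss; the function names and the sample scores are illustrative assumptions, and a real system would compute these losses inside a training loop of a deep learning framework.

```python
import numpy as np

def bce(pred, target, eps=1e-7):
    """Binary cross-entropy between predicted probabilities and 0/1 targets."""
    pred = np.clip(pred, eps, 1.0 - eps)   # avoid log(0)
    return float(-np.mean(target * np.log(pred)
                          + (1.0 - target) * np.log(1.0 - pred)))

def discriminator_loss(d_real, d_fake):
    """The discriminator wants real images scored 1 and generated ones 0."""
    return bce(d_real, np.ones_like(d_real)) + bce(d_fake, np.zeros_like(d_fake))

def generator_loss(d_fake):
    """The generator wants its images to be scored as real (1)."""
    return bce(d_fake, np.ones_like(d_fake))

# Assumed discriminator scores for a small batch.
d_real = np.array([0.9, 0.8])   # scores on real images
d_fake = np.array([0.1, 0.3])   # scores on generated images

loss_d = discriminator_loss(d_real, d_fake)
loss_g = generator_loss(d_fake)
```

With these scores the discriminator is currently winning, so its loss is low while the generator's loss is high. Training alternates updates so that each network improves against the other.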

The video below explains in simple words what GANs are:

- Diffusion Models

These models work by starting with a field of random noise and gradually denoising it to form a structured image. In the context of rendering, diffusion models enable high-quality synthesis of images and animations with intricate textures and lighting. They have rapidly gained popularity due to their stability and ability to produce consistent, high-fidelity results, especially in tasks like generative image creation and motion interpolation.
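The noise-then-denoise idea can be sketched with a DDPM-style schedule. The NumPy code below is a minimal sketch under stated assumptions: the schedule values and function names are made up for illustration, and `oracle` is a hypothetical stand-in for a trained noise-prediction network. It "knows" the single target image, which is only possible in a toy setting, but it lets the sampling loop run end to end.

```python
import numpy as np

def make_schedule(num_steps=50, beta_start=1e-4, beta_end=0.2):
    """Linear noise schedule (values assumed for this toy example)."""
    betas = np.linspace(beta_start, beta_end, num_steps)
    alphas = 1.0 - betas
    alpha_bars = np.cumprod(alphas)
    return betas, alphas, alpha_bars

def add_noise(x0, t, alpha_bars, rng):
    """Forward process: jump directly from a clean image to step t."""
    eps = rng.standard_normal(x0.shape)
    x_t = np.sqrt(alpha_bars[t]) * x0 + np.sqrt(1.0 - alpha_bars[t]) * eps
    return x_t, eps

def sample(predict_noise, shape, schedule, rng):
    """Reverse process: start from pure noise and denoise step by step."""
    betas, alphas, alpha_bars = schedule
    x = rng.standard_normal(shape)
    for t in reversed(range(len(betas))):
        eps_hat = predict_noise(x, t)
        # Remove the predicted noise for this step.
        x = (x - betas[t] / np.sqrt(1.0 - alpha_bars[t]) * eps_hat) / np.sqrt(alphas[t])
        if t > 0:
            # Re-inject a small amount of noise on all but the final step.
            x = x + np.sqrt(betas[t]) * rng.standard_normal(shape)
    return x

rng = np.random.default_rng(0)
schedule = make_schedule()
_, _, alpha_bars = schedule
target = np.array([[0.0, 1.0], [1.0, 0.0]])   # a tiny 2x2 "image"

def oracle(x, t):
    """Hypothetical perfect noise predictor for a dataset of one image."""
    return (x - np.sqrt(alpha_bars[t]) * target) / np.sqrt(1.0 - alpha_bars[t])

result = sample(oracle, target.shape, schedule, rng)
```

In a real model, `oracle` is replaced by a neural network trained on pairs produced by `add_noise`, learning to predict the noise that was added at each step.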

A deeper explanation with clear, easy-to-understand examples follows below:

- Neural Textures

Neural textures are AI-generated, image-like patterns that can be applied directly to 3D models. Unlike traditional textures, which are static, neural textures are dynamic and learnable as they adapt based on lighting conditions, viewing angles, or scene context. This flexibility allows for more realistic material rendering, such as shimmering fabrics, aging surfaces, or even animated skin pores, greatly enhancing the immersion of 3D environments.
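One way to picture a neural texture is as a grid of learned feature vectors that is sampled at a surface's UV coordinates and then decoded into a color by a small network that also sees the viewing direction. The NumPy sketch below follows that interpretation; the texture size, feature count, layer sizes, and function names are assumptions for illustration, and the weights are random rather than trained, so the output colors are meaningless beyond showing the data flow.

```python
import numpy as np

def sample_bilinear(texture, uv):
    """Sample a feature texture of shape (H, W, C) at uv coords in [0, 1]."""
    h, w, _ = texture.shape
    x = uv[:, 0] * (w - 1)
    y = uv[:, 1] * (h - 1)
    x0 = np.floor(x).astype(int)
    y0 = np.floor(y).astype(int)
    x1 = np.minimum(x0 + 1, w - 1)   # clamp at the texture border
    y1 = np.minimum(y0 + 1, h - 1)
    fx = (x - x0)[:, None]
    fy = (y - y0)[:, None]
    top = texture[y0, x0] * (1 - fx) + texture[y0, x1] * fx
    bottom = texture[y1, x0] * (1 - fx) + texture[y1, x1] * fx
    return top * (1 - fy) + bottom * fy

def decode(features, view_dirs, w1, b1, w2, b2):
    """Tiny MLP: (features + view direction) -> RGB in [0, 1]."""
    inputs = np.concatenate([features, view_dirs], axis=1)
    hidden = np.maximum(inputs @ w1 + b1, 0.0)          # ReLU
    return 1.0 / (1.0 + np.exp(-(hidden @ w2 + b2)))    # sigmoid

rng = np.random.default_rng(0)
texture = rng.standard_normal((4, 4, 8))       # 4x4 texels, 8 features each
w1 = 0.1 * rng.standard_normal((8 + 3, 16))    # untrained weights (assumed sizes)
b1 = np.zeros(16)
w2 = 0.1 * rng.standard_normal((16, 3))
b2 = np.zeros(3)

uv = np.array([[0.0, 0.0], [0.5, 0.5], [1.0, 1.0]])
view_dirs = np.tile([0.0, 0.0, 1.0], (3, 1))   # camera looking down the z axis
rgb = decode(sample_bilinear(texture, uv), view_dirs, w1, b1, w2, b2)
```

Because the decoder takes the view direction as input, the same texel can produce different colors from different angles, which is what allows view-dependent effects once the texture and weights are trained.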

A good example of this is a video published by NVIDIA a few months ago showcasing the RTX Kit, a developer toolkit that combines real-time AI rendering technologies with the power of GeForce RTX™ 50 Series GPUs. Designed for next-gen path-traced games and applications, RTX Kit includes features like RTX Neural Materials for cinematic material rendering, RTX Neural Faces for lifelike real-time facial animation, DLSS 4 for up to 8x higher frame rates, and RTX Mega Geometry, which enables up to 100x more ray-traced detail. These innovations are brought to life in Zorah, a tech demo created with Unreal Engine 5 that offers a glimpse into the future of photorealistic, AI-powered 3D worlds.

Tools and Frameworks for Developers.

As neural rendering gains traction across industries, developers have a growing number of tools at their disposal to integrate these capabilities into their workflows.

One of the most prominent is NVIDIA Instant-NGP (Instant Neural Graphics Primitives), which drastically reduces the time needed to train and render Neural Radiance Fields (NeRFs). Designed with real-time performance in mind, it allows for fast experimentation and deployment, making high-quality neural rendering more accessible than ever.

For web developers, Three-NeRF extends the popular Three.js library by enabling NeRF-based rendering directly in the browser. This makes it possible to create immersive, interactive 3D scenes that run efficiently without heavy client-side software or plugins, which makes it well suited to online product previews, virtual tours, and more.

Meanwhile, core machine learning libraries like TensorFlow.js and PyTorch3D serve as powerful backbones for custom neural rendering implementations. TensorFlow.js enables browser-based AI models written in JavaScript, while PyTorch3D offers robust Python tools for building and training neural networks on 3D data. Both frameworks empower developers to experiment with advanced rendering techniques, from training differentiable models to deploying real-time inference engines.

Real-Time Visual Generation with AI.

Traditionally, rendering was a precomputed process: images were “baked” before users interacted with them. Neural rendering introduces real-time inference, allowing visuals to be computed on the fly.

This leap in responsiveness has massive implications for web experiences. For example:

- Product previews that adjust instantly to a user's actions

- Virtual avatars that respond to speech or gestures

- Architectural walkthroughs that update lighting and shadows in real time

Real-World Use Cases on the Web.

Neural rendering is no longer confined to research labs or high-end production studios—it's actively shaping how we interact with products, spaces, and stories online. Here's how different industries are already benefiting from its real-time power and adaptability:

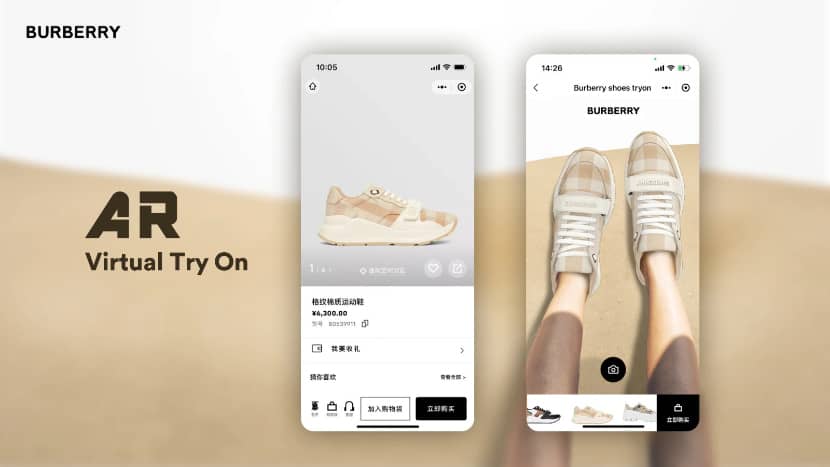

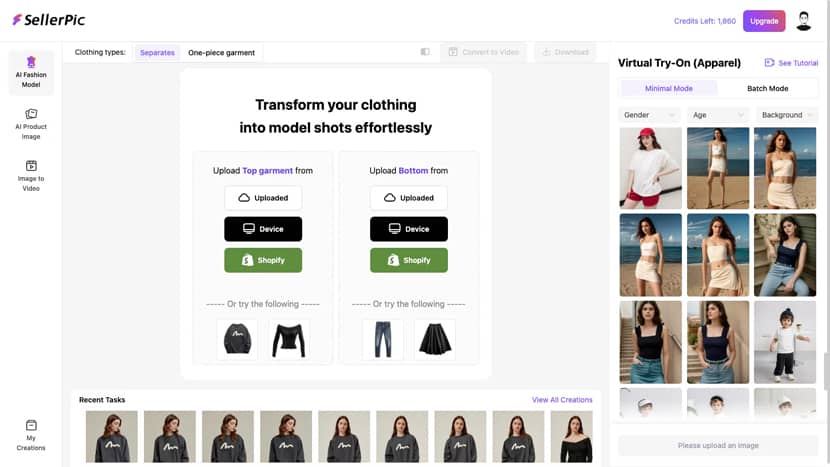

- In e-commerce, AI-powered virtual try-ons allow users to see how clothing, shoes, or accessories would look on different body types or under various lighting conditions, enhancing personalization and boosting buyer confidence.

- In real estate, potential buyers can immerse themselves in interactive 3D walkthroughs of homes, complete with dynamic lighting and realistic materials, giving them a sense of the space long before setting foot inside.

- In entertainment and media, neural rendering enables environments and characters to adapt in real time to user interactions, opening the door to next-level interactive storytelling.

Shopify’s virtual try-on experience leverages advanced AI technologies, like SellerPic and Kivisense, to let shoppers preview how shoes, bags, and clothing would look on them in real time. Whether through uploaded photos or live camera feeds, these tools bring a new level of personalization and realism to online shopping.

Web Industries Benefiting from Neural Rendering.

Neural rendering isn’t just for 3D artists. It is transforming a variety of sectors, some of which are briefly listed below:

- Retail: Personalized product showcases and real-time customization options.

- Real Estate: Dynamic, interactive 3D tours without bulky software downloads.

- Education: Interactive diagrams that explain complex scientific phenomena in 3D.

- Journalism: Scroll-triggered visuals that adjust as stories unfold.

- Design & Development: On-the-fly previews of layouts and textures that change with user settings.

This video, hosted by Oliver Emberton, CEO of Silktide, presents a comprehensive and entertaining exploration of how generative AI is revolutionizing web design. Through real-world examples and rapid-fire demonstrations, Emberton shows how AI tools can now assist with everything from creating user personas and drafting marketing copy to generating page layouts, logos, and even usability testing. He highlights both specialized and general-purpose AI platforms, like ChatGPT, Midjourney, and Uizard, and outlines how these tools are dramatically reducing design time while expanding creative possibilities. The talk closes with a forward-looking take on the next 20 years of AI, predicting breakthroughs in speed, accuracy, and creative autonomy that could redefine digital creation as we know it.

Key Challenges and Developer Considerations.

While the possibilities are exciting, developers face technical and ethical hurdles.

Technical and Ethical Hurdles.

- Performance Strain: Browser-based neural rendering can tax local GPUs and strain memory, especially on mobile.

- Edge AI Solutions: Offloading rendering to the edge can help balance performance, speed, and device compatibility.

- Model Maintenance: Constant updates are needed as new data becomes available or content needs to adapt.

- Ethical Issues: Using real-world data, especially facial scans or locations, raises privacy concerns. Developers must implement data protection measures and transparency in AI usage.

To future-proof their systems, developers should adopt modular architectures, include fallback rendering options, and build with progressive enhancement in mind.
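A fallback chain of this kind can be sketched as a small decision function, for example on the server that decides which experience to deliver. Everything in the sketch below is hypothetical: the `DeviceProfile` fields, the tier names, and the 4096 MB threshold are assumptions chosen for illustration, and a real project would derive them from its own capability detection and testing.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class DeviceProfile:
    """Capabilities reported or estimated for a client (hypothetical fields)."""
    has_webgpu: bool
    has_webgl: bool
    estimated_gpu_memory_mb: int
    is_mobile: bool

def choose_render_tier(device: DeviceProfile, edge_available: bool) -> str:
    """Pick the richest rendering tier the device can reasonably handle."""
    # Tier 1: run the neural model locally on capable desktop GPUs.
    if (device.has_webgpu
            and device.estimated_gpu_memory_mb >= 4096   # assumed threshold
            and not device.is_mobile):
        return "neural-local"
    # Tier 2: offload inference to an edge server and stream the result.
    if edge_available:
        return "neural-edge"
    # Tier 3: conventional rasterized 3D without a neural model.
    if device.has_webgl:
        return "rasterized"
    # Tier 4: baseline that works everywhere.
    return "static-image"

desktop = DeviceProfile(True, True, 8192, False)
phone = DeviceProfile(False, True, 1024, True)
legacy = DeviceProfile(False, False, 0, False)

desktop_tier = choose_render_tier(desktop, edge_available=True)
phone_tier = choose_render_tier(phone, edge_available=True)
legacy_tier = choose_render_tier(legacy, edge_available=False)
```

Ordering the checks from richest to simplest keeps the baseline experience available to every visitor, which is the core idea of progressive enhancement.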

The Future of Neural Rendering in Web Development & 3D Art.

The future of neural rendering is unfolding rapidly, redefining both the visual quality and creative workflow of 3D art and web experiences. Once confined to academic labs and research-heavy pipelines, these AI-driven techniques are now becoming powerful tools in the hands of artists, designers, and developers alike.

On the web development front, technologies like WebGPU, Three.js, and WebXR are merging with neural AI to deliver real-time, cinematic 3D graphics directly in the browser. These advances are enabling everything from interactive product configurators to immersive online exhibitions, all without heavy plugins or game engines.

In the 3D art world, we’re seeing a hybridization of traditional rendering engines (such as Unreal Engine or Unity) with neural rendering modules. This fusion allows for smarter, faster visualizations where materials, lighting, and animations can adapt dynamically based on learned data, reducing both time and manual effort.

Perhaps most exciting is the democratization of these tools. Thanks to open-source neural rendering libraries and user-friendly APIs, even small studios and solo creators can now produce results that were once reserved for top-tier R&D teams. The barrier to entry continues to shrink, and the creative ceiling continues to rise.

With just a few lines of code, or a single well-trained neural model, developers and digital artists alike can craft intelligent, responsive, and visually stunning experiences that redefine what’s possible on screen.

Nevertheless, we have yet to uncover all the potential downsides of this technology, and its full evolution will undoubtedly introduce new challenges to our industry. Only time will reveal whether the advantages will ultimately outweigh the disadvantages and whether this transformation will be as smooth as we hope.

Frequently Asked Questions.

How does rendering work in digital graphics?

Rendering transforms 3D models into 2D images. Traditional rendering simulates light physics, while neural rendering learns from real-world data to produce visual outputs.

What role does a single neuron play in an AI model?

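Each neuron computes a weighted sum of its inputs, applies an activation function, and passes the result on to the next layer. Many neurons working together let the network recognize patterns, loosely similar to how a human brain interprets visuals.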
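As a minimal sketch, a single artificial neuron can be written as a weighted sum of its inputs plus a bias, passed through an activation function. The sigmoid activation and the example numbers below are assumptions chosen for illustration; real networks use many such units and learn the weights from data.

```python
import numpy as np

def neuron(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, squashed by a sigmoid."""
    z = float(np.dot(inputs, weights) + bias)
    return 1.0 / (1.0 + np.exp(-z))

# Assumed values: two input signals with hand-picked weights.
inputs = np.array([1.0, 2.0])
weights = np.array([0.5, -0.25])
activation = neuron(inputs, weights, bias=0.0)
```

Here the two weighted inputs cancel out, so the sigmoid returns its midpoint value. Training adjusts the weights and bias so that the neuron responds strongly to the patterns it should detect.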

Why are neural networks important for the advancement of AI technology?

Neural networks mimic how humans learn and adapt, enabling machines to recognize images, predict patterns, and generate content more intelligently.

What programming language is most widely used in AI development today?

Python remains the top choice due to its rich libraries like TensorFlow, PyTorch, and Keras, making it ideal for AI, ML, and neural rendering development.

Does neural rendering pose any privacy risks for users?

Yes, especially when real images or personal data are used. Ethical implementation requires transparency, consent, and strict data handling protocols.

What’s the best way to begin working with neural rendering in web development?

Start with tools like Three.js, TensorFlow.js, or Three-NeRF, and explore beginner-friendly models using open datasets. Online courses and GitHub repositories offer excellent starting points.

Final Thoughts.

This article is based on editorial insights and curated research inspired by recent breakthroughs in neural rendering. For video demonstrations, research tools, and ongoing tutorials, you can also follow the YouTube creators featured above or explore NVIDIA’s and Google’s open-source neural rendering initiatives.

I began exploring neural rendering about two years ago, curious to understand what it is and how it works. During this casual study, I realized that it wasn’t drastically different from the research I had done earlier (20+ years ago) while trying to grasp how traditional rendering engines interpret light and materials, how cameras behave, and the foundational principles behind photorealism.

Neural rendering is not just a trend. It is a paradigm shift in how we create, share, and experience visuals online. Its real-time power enables a new generation of web experiences that are immersive, interactive, and deeply personal. Whether you’re a designer, developer, or digital storyteller, now is the time to explore the possibilities of neural rendering, because it looks set to shape the future.

We hope that this article helped you understand what neural rendering is and that it inspired you to take your next learning step into the world of 3D data.

Kind regards & Keep rendering! 🧡

About the author

Vasilis Koutlis, the founder of VWArtclub, was born in Athens in 1979. After studying furniture design and decoration, he started dedicating himself to 3D art in 2002. In 2012, the idea of VWArtclub was born: an active 3D community that has grown over the last 12 years into one of the largest online 3D communities worldwide, with over 160 thousand members. He acquired partners worldwide, and various collaborators trusted him with their ideas as he rewarded them with his consistent state-of-the-art services. Not a moment goes by without him thinking of a beautiful image; thus, he is never concerned with time but only with the design's quality.